Abstract

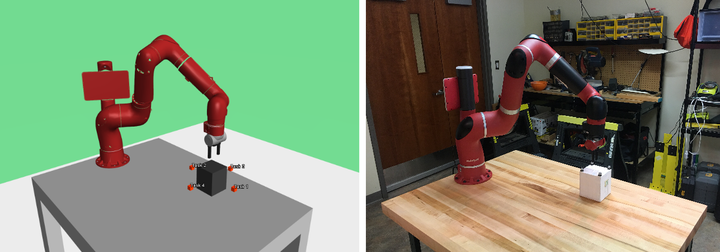

We present a strategy for simulation-to-real transfer that builds on recent advances in robot skill decomposition. Rather than focusing on minimizing the simulation-reality gap, we learn a diverse set of skills and their variations, and embed those skill variations in a single continuously parameterized space. We then interpolate, search, and plan in this space to find a transferable policy that solves more complex, high-level tasks by combining low-level skills and their variations. We first characterize the behavior of this learned skill space by experimenting with several techniques for composing pre-learned latent skills. We then discuss an algorithm that allows our method to perform long-horizon tasks never seen in simulation by intelligently sequencing short-horizon latent skills. Our algorithm adapts to unseen tasks online by repeatedly choosing new skills from the latent space, using live sensor data and simulation to predict which latent skill will perform best next in the real world. Importantly, our method learns to control a real robot in joint space to achieve these high-level tasks with little or no on-robot time, even though the low-level policies may not transfer perfectly from simulation to reality and the low-level skills were not trained on any examples of high-level tasks. Beyond our results indicating a lower sample complexity for families of tasks, we believe that our method provides a promising template for combining learning-based methods with proven classical robotics algorithms such as model-predictive control (MPC).