Heterogeneous Sensor Fusion via Confidence-rich 3D Grid Mapping: Application to Physical Robots

Abstract

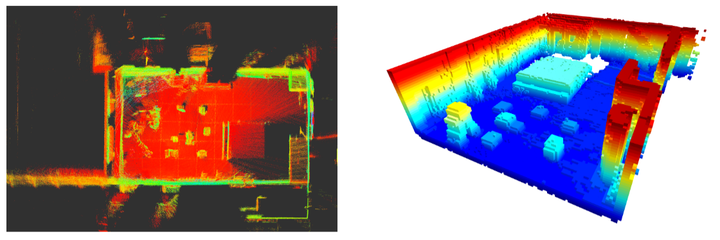

Autonomous navigation of intelligent physical systems depends largely on the system's ability to generate an accurate map of its environment. The confidence-rich grid mapping algorithm provides a novel map representation based on range data, storing richer information at each voxel, including an estimate of the variance of occupancy. Every sensor has its own capabilities and limitations, so a single sensor may not be able to provide a detailed assessment of dynamic terrain. Incorporating multiple sensing modalities on a robot and fusing their measurements leads to higher certainty, reduced noise, and improved fault tolerance when mapping real-world scenes. In this work, we investigate and evaluate sensor fusion techniques based on confidence-rich grid mapping through a series of experiments on physical robotic systems using measurements from heterogeneous ranging sensors.
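The idea of keeping both an occupancy estimate and its variance at each voxel, and of combining readings from sensors with different noise levels, can be illustrated with a minimal sketch. It assumes each sensor delivers an independent occupancy estimate for a voxel together with a known variance, and it fuses them by inverse-variance weighting. This is a generic fusion rule swapped in for illustration, not the confidence-rich mapping update itself; `VoxelEstimate`, `fuse`, and the lidar/sonar readings are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoxelEstimate:
    """Hypothetical per-voxel record: occupancy estimate plus its variance."""
    mean: float      # estimated occupancy in [0, 1]
    variance: float  # uncertainty of that estimate (must be > 0)


def fuse(a: VoxelEstimate, b: VoxelEstimate) -> VoxelEstimate:
    """Fuse two independent estimates by inverse-variance weighting.

    Generic fusion rule used for illustration only; it is not the
    confidence-rich grid mapping update.
    """
    if a.variance <= 0 or b.variance <= 0:
        raise ValueError("variances must be positive")
    wa = 1.0 / a.variance  # weight = precision of each estimate
    wb = 1.0 / b.variance
    variance = 1.0 / (wa + wb)  # fused variance is below either input variance
    mean = variance * (wa * a.mean + wb * b.mean)  # precision-weighted average
    return VoxelEstimate(mean, variance)


if __name__ == "__main__":
    lidar = VoxelEstimate(mean=0.9, variance=0.01)  # hypothetical low-noise reading
    sonar = VoxelEstimate(mean=0.6, variance=0.04)  # hypothetical noisier reading
    fused = fuse(lidar, sonar)
    print(f"mean={fused.mean:.3f}, variance={fused.variance:.4f}")
```

The fused mean lands between the two readings but closer to the lower-variance one, and the fused variance is smaller than either input, which mirrors the "higher certainty, reduced noise" motivation for combining heterogeneous sensors.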