Autonomous navigation

© CoSTAR

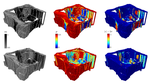

I have worked on autonomous robot navigation with quadrotors that build a map of the environment while coping with perception uncertainty. In Confidence-rich Grid Mapping (CRM), we extended the commonly used voxel-based robot mapping algorithm to account for the interdependence of voxels that are updated from the same depth measurements. This yields more accurate uncertainty predictions than previous work. Such a map representation gives rise to new trajectory generation algorithms that account for perception uncertainty.
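Why are voxels that share a depth measurement interdependent? A simplified forward sensor model makes this concrete: a ray can only be stopped by voxel k if every voxel in front of it is free. The sketch below is a hypothetical illustration in that spirit, not the CRM update itself. It treats each voxel's occupancy as an assumed blocking probability, uses a Gaussian range-noise model, and ignores the "no hit" outcome. All of these are simplifying assumptions.

```python
import math


def ray_cause_probabilities(occupancy):
    """Prior probability that a ray is first stopped at each voxel along it.

    occupancy[k] is an assumed probability that voxel k blocks the ray.
    Returns (per_voxel, miss), where
    per_voxel[k] = occupancy[k] * prod_{j<k} (1 - occupancy[j])
    and miss is the probability that the ray passes every voxel.
    """
    per_voxel = []
    free_so_far = 1.0  # probability that all voxels before the current one are free
    for m in occupancy:
        per_voxel.append(free_so_far * m)
        free_so_far *= 1.0 - m
    return per_voxel, free_so_far


def cause_posterior(occupancy, voxel_ranges, z, sigma):
    """Posterior over which voxel produced depth reading z.

    Assumes Gaussian range noise with standard deviation sigma. The Gaussian
    normalizing constant is identical for every voxel, so it cancels out.
    The "no hit" outcome is ignored for brevity.
    """
    prior, _ = ray_cause_probabilities(occupancy)
    weights = [
        p * math.exp(-0.5 * ((z - d) / sigma) ** 2)
        for p, d in zip(prior, voxel_ranges)
    ]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("reading is incompatible with every voxel on the ray")
    return [w / total for w in weights]


# Same reading, different belief about the first voxel only.
ranges = [1.0, 2.0, 3.0]
print(cause_posterior([0.1, 0.1, 0.5], ranges, z=3.0, sigma=0.5))
print(cause_posterior([0.9, 0.1, 0.5], ranges, z=3.0, sigma=0.5))
```

In this example, changing only the first voxel's occupancy changes the posterior of every voxel on the ray. A single reading therefore informs all of them jointly, which an independent per-voxel update cannot capture.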

To this end, in Planning High-speed Safe Trajectories in Confidence-rich Maps, we propose a trajectory optimization algorithm that maximizes the Lower Confidence Bound (LCB) of reachability. The LCB trades off the expected reachability of the current solution candidate against the estimated uncertainty of that reachability estimate. In the resulting trajectories, the robot preemptively moves toward areas of the environment from which it can steadily perceive the upcoming segment of the path.
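The LCB trade-off can be sketched as follows. This is a minimal illustration under stated assumptions, not the planner from the paper. A candidate path's reachability is modeled as the product of the free-space probabilities of the voxels it crosses. Voxel occupancies are treated as independent random variables with a mean and a variance, which is the kind of per-voxel confidence a CRM-style map provides. The weight `kappa` is a hypothetical tuning parameter.

```python
import math


def reachability_stats(voxel_means, voxel_vars):
    """Mean and standard deviation of reachability R = prod_i (1 - m_i).

    Assumes the occupancies m_i are independent, with means voxel_means[i]
    and variances voxel_vars[i]. For independent factors,
    E[R] = prod E[1 - m_i] and E[R^2] = prod (Var[m_i] + E[1 - m_i]^2).
    """
    mean = 1.0
    second_moment = 1.0
    for mu, var in zip(voxel_means, voxel_vars):
        free_mean = 1.0 - mu
        mean *= free_mean
        second_moment *= var + free_mean ** 2
    variance = max(second_moment - mean ** 2, 0.0)  # clamp round-off below zero
    return mean, math.sqrt(variance)


def lower_confidence_bound(voxel_means, voxel_vars, kappa=1.0):
    """LCB = expected reachability minus kappa times its standard deviation."""
    mean, std = reachability_stats(voxel_means, voxel_vars)
    return mean - kappa * std


def pick_candidate(candidates, kappa=1.0):
    """Index of the candidate (means, variances) with the highest LCB."""
    return max(
        range(len(candidates)),
        key=lambda i: lower_confidence_bound(*candidates[i], kappa=kappa),
    )


# Path A crosses well-observed voxels. Path B looks freer on average,
# but the occupancy of the voxels it crosses is still uncertain.
path_a = ([0.1, 0.1], [0.0, 0.0])
path_b = ([0.05, 0.05], [0.04, 0.04])
print(pick_candidate([path_a, path_b], kappa=0.0))  # 1: highest expected value wins
print(pick_candidate([path_a, path_b], kappa=1.0))  # 0: uncertainty is penalized
```

With `kappa = 0` the objective reduces to expected reachability. Increasing `kappa` increasingly favors paths through voxels the robot has observed confidently. This mirrors the preference, described above, for moving toward viewpoints that keep the upcoming path well perceived.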

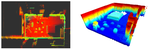

In collaboration with JPL, where I spent the summer of 2018 as an intern under the guidance of Ali-akbar Agha-mohammadi, I extended the mapping solution to fuse measurements from multiple robots equipped with heterogeneous sensors, such as stereo cameras and LIDARs, in Heterogeneous Sensor Fusion via Confidence-rich 3D Grid Mapping: Application to Physical Robots. The map updates are combined efficiently in real time. Each sensor's probabilistic measurement model determines the noise characteristics that CRM uses to compute the voxel updates. In our experiments, we demonstrated the mapping framework running in tandem on two platforms. The first was an Intel Aero drone equipped with a RealSense depth sensor and a downward-facing 2D LIDAR. The second was a novel wheeled robot equipped with two planar LIDAR sensors that spin as the wheels rotate.
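One way to see how a per-sensor measurement model shapes the update is to compare two range-noise models. For a stereo camera, depth error grows roughly with the square of the distance, following the standard disparity-error propagation sigma_z ≈ z² · sigma_d / (f · b). For a LIDAR, range error is roughly constant. The class names, parameter values, and the Gaussian spread over voxels along the ray are hypothetical choices for illustration. They are not the models or calibrations used in the paper, nor specifications of the sensors named above.

```python
import math
from dataclasses import dataclass


@dataclass
class StereoModel:
    """Hypothetical stereo noise model: sigma_z = z^2 * sigma_d / (f * b)."""
    focal_px: float          # focal length in pixels
    baseline_m: float        # stereo baseline in meters
    disparity_std_px: float  # disparity noise in pixels

    def range_std(self, z: float) -> float:
        return z * z * self.disparity_std_px / (self.focal_px * self.baseline_m)


@dataclass
class LidarModel:
    """Hypothetical LIDAR noise model with range-independent error."""
    std_m: float

    def range_std(self, z: float) -> float:
        return self.std_m


def measurement_weights(model, z, voxel_ranges):
    """Normalized Gaussian weights of a reading z over voxels along the ray.

    The sensor's own noise model sets sigma, so the same reading is spread
    over many voxels for a noisy sensor and concentrated for a precise one.
    """
    sigma = model.range_std(z)
    weights = [math.exp(-0.5 * ((d - z) / sigma) ** 2) for d in voxel_ranges]
    total = sum(weights)
    if total == 0.0:
        raise ValueError("reading lies far outside the given voxels")
    return [w / total for w in weights]


voxel_ranges = [7.0 + 0.1 * i for i in range(21)]  # 7.0 m to 9.0 m, 0.1 m voxels
stereo = StereoModel(focal_px=380.0, baseline_m=0.05, disparity_std_px=0.1)
lidar = LidarModel(std_m=0.03)
# At 8 m the LIDAR weights peak far more sharply than the stereo ones.
print(max(measurement_weights(stereo, 8.0, voxel_ranges)))
print(max(measurement_weights(lidar, 8.0, voxel_ranges)))
```

Because the noise model is an interface of the sensor rather than of the map, a new sensor type can be fused by supplying its own `range_std`. The voxel update logic stays unchanged.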

Being affiliated with JPL, I contributed to the perception stack of team CoSTAR (JPL/Caltech/MIT/KAIST/LTU) in the DARPA SubT Challenge where ensembles of heterogeneous robots were tasked to explore and map underground tunnels and mines under degraded visual conditions.